LPR

1 Overview

1.1 Background Introduction

The open-source license plate recognition (LPR) algorithm used here comes from the LPRNet_Pytorch repository. The algorithm currently recognizes Chinese license plates, including both blue and green plates. Repository address:

https://github.com/sirius-ai/LPRNet_Pytorch

Model download address:

https://github.com/sirius-ai/LPRNet_Pytorch/blob/master/weights/Final_LPRNet_model.pth

1.2 Usage Instructions

The Linux SDK-alkaid ships with a pre-converted offline model and a board-side example by default. The relevant file paths are as follows:

- Board-side example program path

      Linux_SDK/sdk/verify/opendla/source/ocr/lpr

- Board-side offline model path

      Linux_SDK/project/board/${chip}/dla_file/ipu_open_models/ocr/lprnet_94x24.img

- Board-side test image path

      Linux_SDK/sdk/verify/opendla/source/resource/license_plate.jpg

If you do not need to convert the model yourself, skip directly to section 3.

2 Model Conversion

2.1 ONNX Model Conversion

- Setting up the Python environment

      $conda create -n lpr python==3.10
      $conda activate lpr
      $git clone https://github.com/sirius-ai/LPRNet_Pytorch.git
      $cd LPRNet_Pytorch

  Note: The Python environment setup provided here is for reference only; for the specific setup process, refer to the official source code tutorial: https://github.com/sirius-ai/LPRNet_Pytorch/blob/master/README.md

- Model testing

  - Run the model testing script to ensure the lpr environment is configured correctly.

        $python test_LPRNet.py

- Model export

  - Install dependency packages

        $pip install onnx -i https://pypi.tuna.tsinghua.edu.cn/simple
        $pip install onnx-simplifier -i https://pypi.tuna.tsinghua.edu.cn/simple

  - Write the model conversion script

    export.py:

        import argparse
        import os

        import numpy as np
        import onnx
        import torch
        from onnxsim import simplify
        from torch.utils.data import DataLoader

        from data.load_data import CHARS, LPRDataLoader
        from model.LPRNet import build_lprnet


        def get_parser():
            parser = argparse.ArgumentParser(description='parameters to export net')
            parser.add_argument('--img_size', default=[94, 24], help='the image size')
            parser.add_argument('--test_img_dirs', default="./data/test", help='the test images path')
            parser.add_argument('--dropout_rate', default=0, help='dropout rate.')
            parser.add_argument('--lpr_max_len', default=8, help='license plate number max length.')
            parser.add_argument('--test_batch_size', default=1, help='testing batch size.')
            parser.add_argument('--phase_train', default=False, type=bool, help='train or test phase flag.')
            parser.add_argument('--num_workers', default=8, type=int, help='Number of workers used in dataloading')
            parser.add_argument('--cuda', default=True, type=bool, help='Use cuda to run model')
            parser.add_argument('--show', default=False, type=bool, help='show test image and its predict result or not.')
            parser.add_argument('--pretrained_model', default='./weights/Final_LPRNet_model.pth', help='pretrained base model')
            return parser.parse_args()


        def collate_fn(batch):
            imgs = []
            labels = []
            lengths = []
            for img, label, length in batch:
                imgs.append(torch.from_numpy(img))
                labels.extend(label)
                lengths.append(length)
            labels = np.asarray(labels).flatten().astype(np.float32)
            return (torch.stack(imgs, 0), torch.from_numpy(labels), lengths)


        def export_onnx(net, dataset, args, device):
            # Take one batch from the test set to use as the export sample input.
            loader = DataLoader(dataset, args.test_batch_size, shuffle=True,
                                num_workers=args.num_workers, collate_fn=collate_fn)
            images, _, _ = next(iter(loader))
            images = images.to(device)

            # Export the network to ONNX.
            os.makedirs('./opendla', exist_ok=True)
            onnx_path = './opendla/lprnet.onnx'
            torch.onnx.export(
                net,
                args=(images,),
                f=onnx_path,
                input_names=['images'],
                output_names=['output'],
                export_params=True,
                opset_version=13)

            # Simplify the exported graph and save it under a new name.
            model_simp, check = simplify(onnx.load(onnx_path))
            assert check, "Simplified ONNX model could not be validated"
            onnx.save(model_simp, './opendla/lprnet_sim.onnx')


        def main():
            args = get_parser()

            lprnet = build_lprnet(lpr_max_len=args.lpr_max_len, phase=args.phase_train,
                                  class_num=len(CHARS), dropout_rate=args.dropout_rate)
            device = torch.device("cuda:0" if args.cuda else "cpu")
            lprnet.to(device)
            print("Successful to build network!")

            # Load the pretrained weights.
            if args.pretrained_model:
                lprnet.load_state_dict(torch.load(args.pretrained_model, map_location=device))
                print("load pretrained model successful!")
            else:
                print("[Error] Can't find pretrained model, please check!")
                return False

            test_img_dirs = os.path.expanduser(args.test_img_dirs)
            test_dataset = LPRDataLoader(test_img_dirs.split(','), args.img_size, args.lpr_max_len)
            export_onnx(lprnet, test_dataset, args, device)
            return True


        if __name__ == "__main__":
            main()

  - Run the model conversion script

        $python export.py

2.2 Offline Model Conversion

2.2.1 Pre & Post Processing Instructions¶

-

Preprocessing

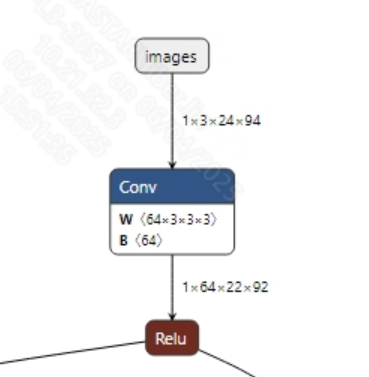

The input information for the successfully converted lpr_sim.onnx model is shown in the image below. The required input image size is (1, 3, 94, 24). Additionally, the pixel values need to be normalized to the range [0, 1].

-

Postprocessing

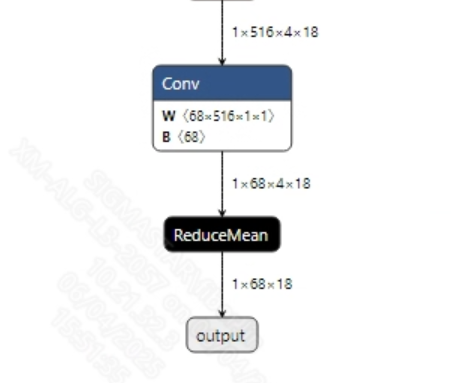

The open-source demo provided here is only used to evaluate the model inference time, so postprocessing is not provided. The model output information is shown in the image below.
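The pre- and postprocessing steps described above can be sketched in NumPy as follows. This is only an illustrative sketch, not part of the SDK demo: it assumes the normalization uses the mean (127.5) and std (128) values from input_config.ini, that the float tensor layout is NCHW as in the PyTorch model, and that the output is decoded with a plain greedy CTC rule (drop blanks and consecutive repeats). The function names, the toy alphabet, and the blank index are hypothetical; the real character table is `CHARS` in the LPRNet_Pytorch repository.

```python
import numpy as np

# Assumptions: MEAN/STD mirror mean_* and std_value in input_config.ini,
# and the float tensor layout is NCHW as in the PyTorch model.
MEAN, STD = 127.5, 128.0
NET_W, NET_H = 94, 24  # network input width and height


def preprocess(image_hwc: np.ndarray) -> np.ndarray:
    """Turn an already-resized uint8 HxWx3 image into a 1x3xHxW float tensor."""
    if image_hwc.shape != (NET_H, NET_W, 3):
        raise ValueError(f"expected {(NET_H, NET_W, 3)}, got {image_hwc.shape}")
    x = (image_hwc.astype(np.float32) - MEAN) / STD  # roughly [-1, 1]
    x = np.transpose(x, (2, 0, 1))                   # HWC -> CHW
    return x[np.newaxis, ...]                        # add the batch dimension


def greedy_ctc_decode(scores: np.ndarray, chars: str, blank: int) -> str:
    """Decode a (num_classes, time_steps) score matrix with greedy CTC."""
    best = np.argmax(scores, axis=0)  # best class index per time step
    decoded = []
    prev = blank
    for idx in best:
        # Keep a class only if it is not blank and not a consecutive repeat.
        if idx != blank and idx != prev:
            decoded.append(chars[idx])
        prev = idx
    return "".join(decoded)


if __name__ == "__main__":
    dummy = np.zeros((NET_H, NET_W, 3), dtype=np.uint8)
    print(preprocess(dummy).shape)

    # Toy alphabet for illustration only; index 3 ("-") plays the blank role.
    steps = [0, 0, 3, 0, 1, 1, 3, 2]
    scores = np.eye(4)[steps].T
    print(greedy_ctc_decode(scores, "AB1-", blank=3))
```

The image is expected to be resized to 94x24 beforehand (for example with `cv2.resize`). On the board, the offline model output is NHWC and may still be quantized, so it would need to be dequantized and transposed before a decode step like this one applies.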

2.2.2 Offline Model Conversion Process

Note:

1) OpenDLAModel corresponds to the smodel files extracted from the compressed package image-dev_model_convert.tar.
2) The conversion command must be run in the Docker environment; first load the SGS Docker environment by following the Docker development environment tutorial.

- Copy the onnx model to the conversion code directory

      $cp opendla/lprnet_sim.onnx OpenDLAModel/ocr/lpr/onnx

- Conversion command

      $cd IPU_SDK_Release/docker
      $bash run_docker.sh
      # Enter the OpenDLAModel directory in the docker environment
      $cd /work/SGS_XXX/OpenDLAModel
      $bash convert.sh -a ocr/lpr -c config/ocr_lpr -p SGS_IPU_Toolchain (absolute path) -s false

- Final generated model address

      output/${chip}_${time}/lprnet_sim_94x24.img
      output/${chip}_${time}/lprnet_sim_94x24_fixed.sim
      output/${chip}_${time}/lprnet_sim_94x24_float.sim

2.2.3 Key Script Parameter Analysis

- input_config.ini

[INPUT_CONFIG]

inputs = input; # ONNX input node name, separate with commas if there are multiple;

training_input_formats = RGB; # Input format during model training, usually RGB;

input_formats = BGRA; # Board input format, can choose BGRA or YUV_NV12 as appropriate;

quantizations = TRUE; # Enable input quantization, no need to change;

mean_red = 127.5; # Mean value, related to model preprocessing, configure according to actual situation;

mean_green = 127.5; # Mean value, related to model preprocessing, configure according to actual situation;

mean_blue = 127.5; # Mean value, related to model preprocessing, configure according to actual situation;

std_value = 128; # Standard deviation, related to model preprocessing, configure according to actual situation;

[OUTPUT_CONFIG]

outputs = output; # ONNX output node name, separate with commas if there are multiple;

dequantizations = FALSE; # Whether to enable dequantization, fill in according to actual needs, recommended to be TRUE. Set to False, output will be int16; set to True, output will be float32;

- ocr_lpr.cfg

[LPR]

CHIP_LIST=pcupid # Platform name, must match board platform, otherwise model will not run

Model_LIST=lprnet_sim # Input ONNX model name

INPUT_SIZE_LIST=94x24 # Model input resolution

INPUT_INI_LIST=input_config.ini # Configuration file

CLASS_NUM_LIST=0 # Just fill in 0

SAVE_NAME_LIST=lprnet_sim_94x24.img # Output model name

QUANT_DATA_PATH=quant_data # Quantization image path
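To make the effect of the mean and std settings in input_config.ini concrete, the sketch below reads them with Python's standard `configparser` and computes the value range they produce for 8-bit pixels. It assumes the toolchain applies `(pixel - mean) / std_value`, which this document does not state explicitly. The embedded text is a shortened copy of the file, and the helper names are hypothetical.

```python
import configparser

# Shortened copy of input_config.ini, for illustration only.
INI_TEXT = """
[INPUT_CONFIG]
inputs = input; # ONNX input node name
mean_red = 127.5; # Mean value
mean_green = 127.5; # Mean value
mean_blue = 127.5; # Mean value
std_value = 128; # Standard deviation
"""


def read_value(section, key: str) -> str:
    """Return a value with the trailing ';' used by this file format removed."""
    return section[key].rstrip(";").strip()


def normalized_range(ini_text: str):
    """Return (min, max) of (pixel - mean) / std for pixel values in 0..255."""
    parser = configparser.ConfigParser(inline_comment_prefixes=("#",))
    parser.read_string(ini_text)
    cfg = parser["INPUT_CONFIG"]
    mean = float(read_value(cfg, "mean_red"))
    std = float(read_value(cfg, "std_value"))
    # Assumed normalization formula: (pixel - mean) / std.
    return (0 - mean) / std, (255 - mean) / std


if __name__ == "__main__":
    low, high = normalized_range(INI_TEXT)
    print(f"normalized range: [{low}, {high}]")
```

With the values above, the range is slightly narrower than [-1, 1]. If the model was trained with a different normalization, the mean and std entries should be changed to match.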

2.3 Model Simulation

- Get float/fixed/offline model output

      $bash convert.sh -a ocr/lpr -c config/ocr_lpr -p SGS_IPU_Toolchain (absolute path) -s true

  After executing the above command, the output tensor of the float model is saved as a txt file under ocr/lpr/log/output by default. The ocr/lpr/convert.sh script also provides simulation examples for the fixed and offline models; uncomment the corresponding code blocks to obtain the fixed and offline model outputs as well.

- Model Accuracy Comparison

  Using the same input as for the models above, enter the environment built in section 2.1 and add a print statement at line 106 of LPRNet_Pytorch/test_LPRNet.py:

      print(prebs)

  This prints the output tensor of the corresponding PyTorch model node, which can then be compared with the outputs of the float, fixed, and offline models. Note that the output format of the original model is NCHW, while the output formats of the float/fixed/offline models are NHWC.
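The layout difference matters when comparing tensors element by element. The following sketch, which assumes both outputs have already been loaded into NumPy arrays (the txt file format is not described here, so loading is left out), moves the PyTorch channel axis to the end and then reports cosine similarity and maximum absolute difference. The function names are hypothetical.

```python
import numpy as np


def nchw_to_nhwc(x: np.ndarray) -> np.ndarray:
    """Move the channel axis (axis 1) to the last position."""
    return np.moveaxis(x, 1, -1)


def cosine_similarity(a: np.ndarray, b: np.ndarray) -> float:
    """Cosine similarity of two tensors after flattening."""
    a = a.astype(np.float64).ravel()
    b = b.astype(np.float64).ravel()
    denom = np.linalg.norm(a) * np.linalg.norm(b)
    return float(np.dot(a, b) / denom) if denom > 0 else 0.0


def compare(torch_out_nchw: np.ndarray, sim_out_nhwc: np.ndarray):
    """Return (cosine similarity, max abs difference) between the two outputs."""
    ref = nchw_to_nhwc(torch_out_nchw)
    if ref.shape != sim_out_nhwc.shape:
        raise ValueError(f"shape mismatch: {ref.shape} vs {sim_out_nhwc.shape}")
    diff = np.abs(ref.astype(np.float64) - sim_out_nhwc.astype(np.float64))
    return cosine_similarity(ref, sim_out_nhwc), float(diff.max())


if __name__ == "__main__":
    # Synthetic data standing in for the PyTorch and simulator outputs.
    torch_out = np.random.rand(1, 4, 3, 5).astype(np.float32)
    sim_out = np.moveaxis(torch_out, 1, -1) + 1e-3
    print(compare(torch_out, sim_out))
```

A cosine similarity close to 1 for the float model, with a somewhat lower value for the fixed and offline models, is the usual pattern after quantization; what counts as acceptable depends on the application.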

3 Board-Side Deployment

3.1 Program Compilation

Before compiling the example program, first select the defconfig for the SDK full-package build that matches your board (nand/nor/emmc, DDR model, etc.). For details, see the "Development Environment Setup" document in the alkaid SDK sigdoc.

- Compile the board-side lpr example.

      $cd sdk/verify/opendla
      $make clean && make source/ocr/lpr -j8

- Final generated executable file address

      sdk/verify/opendla/out/${AARCH}/app/prog_ocr_lpr

3.2 Running Files

When running the program, the following files need to be copied to the board:

- prog_ocr_lpr

- license_plate.jpg

- lprnet_94x24.img

3.3 Running Instructions

- Usage (command for running the executable):

      ./prog_ocr_lpr -i image -m model [-t threshold]

- Required Input:

  - image: image folder/path of a single image

  - model: path to the offline model to be tested

- Optional Input:

  - threshold: detection threshold (0.0~1.0, default is 0.5)

- Typical Output:

      ./prog_ocr_lpr -i license_plate.jpg -m models/lprnet_94x24.img
      inputs: license_plate.jpg
      model path: models/lprnet_94x24.img
      threshold: 0.500000
      client [1016] connected, module:ipu
      unknown element format 7
      found 1 images!
      the input image: ./license_plate.jpg
      fillbuffer processing...
      net input width: 94, net input height: 24
      client [1016] disconnected, module:ipu
      model invoke time: 2.732000 ms
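Since the demo is mainly used to measure inference time, it can be handy to pull the timing out of captured logs automatically. The sketch below is a hypothetical helper, not part of the SDK; it assumes the log contains a line in the same `model invoke time: ... ms` form as the typical output above.

```python
import re
from typing import Optional

# Matches e.g. "model invoke time: 2.732000 ms" as printed in the typical output.
INVOKE_RE = re.compile(r"model invoke time:\s*([0-9]+(?:\.[0-9]+)?)\s*ms")


def parse_invoke_time_ms(log_text: str) -> Optional[float]:
    """Return the reported invoke time in milliseconds, or None if absent."""
    match = INVOKE_RE.search(log_text)
    return float(match.group(1)) if match else None


if __name__ == "__main__":
    sample = (
        "net input width: 94, net input height: 24\n"
        "client [1016] disconnected, module:ipu\n"
        "model invoke time: 2.732000 ms\n"
    )
    print(parse_invoke_time_ms(sample))
```

Averaging the value over several runs gives a more stable estimate than a single invocation.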