CLIP

1 Overview¶

1.1 Background Introduction¶

CLIP learns the mapping relationship between images and texts through training on a large number of image-text pairs. The key idea is that the feature distance between related image-text pairs is relatively close, while the feature distance between unrelated image-text pairs is far apart. Its application scenarios include zero-shot classification, image retrieval by image, and image retrieval by text.

For more details, please refer to the CLIP official documentation:

https://github.com/openai/CLIP

The download link for the open-source CLIP model is as follows:

1.2 Usage Instructions¶

The Linux SDK-alkaid includes pre-converted offline models and board examples by default. The relevant file paths are as follows:

-

Board example program path:

Linux_SDK/sdk/verify/opendla/source/vlm/clip -

Board offline model paths:

Linux_SDK/project/board/${chip}/dla_file/ipu_open_models/vlm/clip_image_encode.img Linux_SDK/project/board/${chip}/dla_file/ipu_open_models/vlm/clip_text_encode.img -

Board test image path:

Linux_SDK/sdk/verify/opendla/source/resource/retrieval_library

If users do not need to convert models, they can directly jump to section 3.

2 Model Conversion¶

2.1 ONNX Model Conversion¶

-

Python environment setup:

$conda create -n clip python==3.10 $conda activate clip $conda install --yes -c pytorch pytorch=1.7.1 torchvision cudatoolkit=11.0 $pip install ftfy regex tqdm $git clone https://github.com/openai/CLIP.git $cd CLIPNote: The provided Python environment setup is for reference only; please refer to the official source code running tutorial for the specific setup process: https://github.com/openai/CLIP/blob/main/README.md

-

Model Testing:

-

Create the opendla directory and place the downloaded models there, then write the model testing script

opendla/predict.py:import os import clip import torch from torchvision.datasets import CIFAR100 # Load the model device = "cuda" if torch.cuda.is_available() else "cpu" model, preprocess = clip.load("./opendla/RN50.pt", device) # Download the dataset cifar100 = CIFAR100(root=os.path.expanduser("~/.cache"), download=True, train=False) # Prepare the inputs image, class_id = cifar100[3637] image_input = preprocess(image).unsqueeze(0).to(device) text_inputs = torch.cat([clip.tokenize(f"a photo of a {c}") for c in cifar100.classes]).to(device) # Calculate features with torch.no_grad(): image_features = model.encode_image(image_input) text_features = model.encode_text(text_inputs) # Pick the top 5 most similar labels for the image image_features /= image_features.norm(dim=-1, keepdim=True) text_features /= text_features.norm(dim=-1, keepdim=True) similarity = (100.0 * image_features @ text_features.T).softmax(dim=-1) values, indices = similarity[0].topk(5) # Print the result print("\nTop predictions:\n") for value, index in zip(values, indices): print(f"{cifar100.classes[index]:>16s}: {100 * value.item():.2f}%")- Run the model testing script to ensure that the clip environment is configured correctly: $python ./opendla/predict.py

-

-

Model Export:

-

Install the required libraries:

$pip install onnx -i https://pypi.tuna.tsinghua.edu.cn/simple $pip install onnx-simplifier -i https://pypi.tuna.tsinghua.edu.cn/simple -

Write the model conversion script

opendla/export.py:import os,sys sys.path.append(os.getcwd()) import clip import torch.nn as nn import torch import onnx import onnxruntime import onnxsim import cv2 import numpy as np device = torch.device("cuda" if torch.cuda.is_available() else "cpu") def to_numpy(tensors): out = [] if type(tensors) == torch.tensor: tensors = [tensors] for tensor in tensors: if tensor.requires_grad: tensor = tensor.detach().cpu().numpy() else: tensor = tensor.cpu().numpy() out.append(tensor) return out class ImgModelWrapper(nn.Module): def __init__(self, clip_model_name: str, device: torch.device = "cuda" if torch.cuda.is_available() else "cpu", ): super().__init__() # check model info self.clip_model_name = clip_model_name self.device = device # load CLIP self.model = clip.load(clip_model_name)[0] self.model.float() self.model.eval() def forward(self, image): img_features = self.model.encode_image(image) img_features = img_features.mean(axis=0, keepdim=True) img_features_norm = img_features / img_features.norm(dim=-1, keepdim=True) return img_features_norm class TextModelWrapper(nn.Module): def __init__(self, clip_model_name: str, device: torch.device = "cuda" if torch.cuda.is_available() else "cpu", ): super().__init__() # check model info self.clip_model_name = clip_model_name self.device = device # load CLIP self.model = clip.load(clip_model_name)[0] self.model.float() self.model.eval() def forward(self, tokens): tokens = tokens.to(self.device).to(torch.int32) text_features = self.model.encode_text(tokens) text_features = text_features.mean(axis=0, keepdim=True) text_features_norm = text_features / text_features.norm(dim=-1, keepdim=True) return text_features_norm clip_text = TextModelWrapper("./opendla/RN50.pt", device= device) clip_img = ImgModelWrapper("./opendla/RN50.pt", device= device) text='person' text_token = clip.tokenize(text).to('cpu') text_token = text_token.split(80)[0].split(80)[0] img = torch.randn((1,3,224,224)).to('cuda') f='./opendla/clip_text_encode.onnx' with torch.no_grad(): torch.onnx.export( clip_text, text_token, f, opset_version=13, input_names=['text'], output_names=['text_feats'], do_constant_folding=False) model_onnx = onnx.load(f) model_onnx, check = onnxsim.simplify(model_onnx) onnx.save(model_onnx, f) f='./opendla/clip_img_encode.onnx' with torch.no_grad(): torch.onnx.export( clip_img, img, f, opset_version=13, input_names=['image'], output_names=['img_feats'], do_constant_folding=False) model_onnx = onnx.load(f) model_onnx, check = onnxsim.simplify(model_onnx) onnx.save(model_onnx, f) -

Run the model conversion script; this will generate the clip_img_encode.onnx and clip_text_encode.onnx models in the

opendladirectory:$python ./opendla/export.py

-

2.2 Offline Model Conversion¶

2.2.1 Pre & Post-Processing Instructions¶

-

Pre-processing

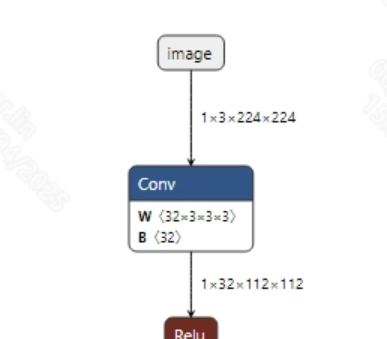

The input information for the successfully converted clip_img_encode.onnx model is shown in the figure below. The required input image size is (1, 3, 224, 224), and the pixel values need to be normalized to the range [0, 1].

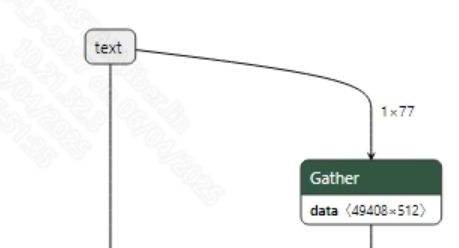

The input information for the successfully converted clip_text_encode.onnx model is shown below. The required input size is (1, 77), with no additional preprocessing needed.

-

Post-processing

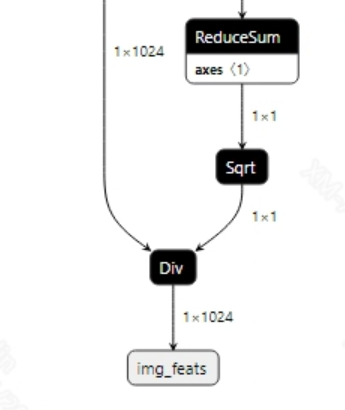

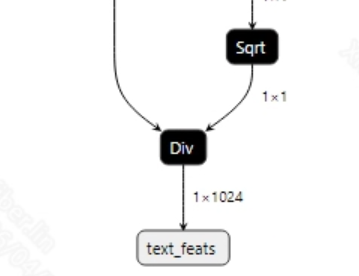

This model does not require post-processing. The output information for the clip_img_encode.onnx and clip_text_encode.onnx models is shown in the figures below.

2.2.2 Offline Model Conversion Process¶

Note: 1) OpenDLAModel corresponds to the smodel files extracted from the compressed package image-dev_model_convert.tar. 2) The conversion command needs to be run in a Docker environment; please load the SGS Docker environment according to the Docker Development Environment Tutorial.

-

Copy the ONNX models to the conversion code directory:

$cp ./opendla/clip_img_encode.onnx OpenDLAModel/vlm/clip/onnx $cp ./opendla/clip_text_encode.onnx OpenDLAModel/vlm/clip/onnx -

Conversion command:

$cd IPU_SDK_Release/docker $bash run_docker.sh # Enter the OpenDLAModel directory in the Docker environment $cd /work/SGS_XXX/OpenDLAModel $bash convert.sh -a vlm/clip -c config/vlm_clip.cfg -p SGS_IPU_Toolchain(absolute path) -s false -

Final generated model locations:

output/${chip}_${time}/clip_image_encode.img output/${chip}_${time}/clip_image_encode_fixed.sim output/${chip}_${time}/clip_image_encode_float.sim output/${chip}_${time}/clip_text_encode.img output/${chip}_${time}/clip_text_encode_fixed.sim output/${chip}_${time}/clip_text_encode_float.sim

2.2.3 Key Script Parameter Analysis¶

-

input_config_img.ini [INPUT_CONFIG] inputs = images; # ONNX input node name; separate multiple names with commas if necessary; training_input_formats = RGB; # Input format during model training, usually RGB; input_formats = BGRA; # Board input format; can choose BGRA or YUV_NV12 based on the situation; quantizations = TRUE; # Enable input quantization; do not modify; mean_red = 123.675; # Mean value related to model pre-processing; configure based on the actual situation; mean_green = 116.28; # Mean value related to model pre-processing; configure based on the actual situation; mean_blue = 103.53; # Mean value related to model pre-processing; configure based on the actual situation; std_value = 58.395:57.12:57.375; # Variance related to model pre-processing; configure based on the actual situation; [OUTPUT_CONFIG] outputs = img_feats; # ONNX output node name; separate multiple names with commas if necessary; dequantizations = TRUE; # Whether to enable dequantization; fill according to actual needs; recommended to be TRUE. Set to False for int16 output; set to True for float32 output. [OPTIMIZE_CONFIG] Light_Offline_Model=TRUE;

-

input_config_text.ini [INPUT_CONFIG] inputs = text; # ONNX input node name; separate multiple names with commas if necessary; input_formats = RAWDATA_U16_NHWC; # Board input format; can choose BGRA or YUV_NV12 based on the situation; quantizations = TRUE; # Enable input quantization; do not modify; [OUTPUT_CONFIG] outputs = TEXT_feats; # ONNX output node name; separate multiple names with commas if necessary; dequantizations = TRUE; # Whether to enable dequantization; fill according to actual needs; recommended to be TRUE. Set to False for int16 output; set to True for float32 output. [OPTIMIZE_CONFIG] optimize_layernorm_precision=TRUE; # Operator optimization optimize_Instancenorm_precision=TRUE; # Operator optimization

-

vlm_clip.cfg [CLIP] CHIP_LIST=pcupid # Platform name; must match the board platform, otherwise the model cannot run Model_LIST=clip_img_encode,clip_text_encode # Input ONNX model names INPUT_SIZE_LIST=0,0 # Model input resolutions INPUT_INI_LIST=input_config_img.ini,input_config_text.ini # Configuration files CLASS_NUM_LIST=0,0 # Just fill in 0 SAVE_NAME_LIST=clip_image_encode.img,clip_text_encode.img # Output model names QUANT_DATA_PATH=quant_data_img,quant_data_txt # Quantization image paths

2.3 Model Simulation¶

-

Obtain float/fixed/offline model output:

$bash convert.sh -a vlm/clip -c config/vlm_clip.cfg -p SGS_IPU_Toolchain(absolute path) -s trueAfter executing the above command, the output tensor of the

floatmodel will be saved to a txt file in thevlm/clip/log/outputpath by default. Additionally, thevlm/clip/convert.shscript also provides simulation examples forfixedandoffline; users can uncomment the code blocks to obtain the outputs forfixedandofflinemodels respectively. -

Model Accuracy Comparison

Under the condition that the input remains the same as above, enter the environment set up in 2.1. Add the print statement at line 25 of the

CLIP/opendla/predict.pyfile:print(image_features) print(text_features)This will allow you to obtain the output tensors corresponding to the PyTorch image model and text model nodes, enabling you to compare them with the float, fixed, and offline models. Additionally, it should be noted that the output format of the original model is

NCHW, while the formats of float/fixed/offline model outputs areNHWC.

3 Board Deployment¶

3.1 Program Compilation¶

Before compiling the example program for the board, you need to select the deconfig according to the board model (nand/nor/emmc, DDR model, etc.) for the SDK full-package compilation. For details, please refer to the Alkaid SDK SIGDOC "Development Environment Setup" document.

-

Compile the board clip example: $cd sdk/verify/opendla $make clean && make source/vlm/clip -j8

-

Final executable file address: sdk/verify/opendla/out/${AARCH}/app/prog_vlm_clip

3.2 Running Files¶

When running the program, the following files need to be copied to the board:

- prog_vlm_clip

- retrieval_library

- clip_image_encode.img

- clip_text_encode.img

3.3 Running Instructions¶

- Usage:

./prog_vlm_clip type gallery query topk imgModel textModel dict(Execution command for the file) -

Required Input:

- type: 0: text-to-image; 1: image-to-image

- gallery: Image query library

- query: Query image or text description

- topk: Number of top predictions to output

- imgModel: Path to the image offline model

- textModel: Path to the text offline model

- dict: Dictionary

-

Typical output:

Text-to-Image Search

./prog_vlm_clip 0 resource/retrieval_library/ "apple" 3 models/clip_image_encode.img models/clip_text_encode.img resource/en_vocab.txt client [863] connected, module:ipu found 5 images! text model invoke time: 27.424000 ms text data: -0.0213144 -0.0241877 -0.0107617 [0] processing resource/retrieval_library/000000177958.jpg... fillbuffer processing... net input width: 224, net input height: 224 img data: -0.000272722 0.0139259 -0.00363061 img model invoke time: 37.070000 ms [1] processing resource/retrieval_library/000000395432.jpg... fillbuffer processing... net input width: 224, net input height: 224 img data: -0.0274256 -0.00395447 0.0141475 img model invoke time: 37.052000 ms [2] processing resource/retrieval_library/000000577364.jpg... fillbuffer processing... net input width: 224, net input height: 224 img data: -0.015102 -0.0224314 -0.0334596 img model invoke time: 37.044000 ms [3] processing resource/retrieval_library/apple1.jpg... fillbuffer processing... img data: 0.0212212 0.0622829 -0.0304767 img model invoke time: 37.069000 ms [4] processing resource/retrieval_library/apple2.jpg... fillbuffer processing... net input width: 224, net input height: 224 img data: 0.0141815 0.0561978 -0.032471 img model invoke time: 37.059000 ms img model post process time: 0.980000 ms apple, the image path is resource/retrieval_library/apple2.jpg, the score is 0.243618 apple, the image path is resource/retrieval_library/apple1.jpg, the score is 0.242924 apple, the image path is resource/retrieval_library/000000577364.jpg, the score is 0.133982 ------shutdown IPU0------ client [863] disconnected, module:ipuImage-to-Image Search

/prog_vlm_clip 1 resource/retrieval_library/ resource/retrieval_library/apple1.jpg 3 models/clip_image_encode.img models/clip_text_encode.img resource/en_vocab.txt client [865] connected, module:ipu found 5 images! fillbuffer processing... img: 0.0212212 0.0622829 -0.0304767 [0] processing resource/retrieval_library/000000177958.jpg... fillbuffer processing... net input width: 224, net input height: 224 img model invoke time: 36.955000 ms [1] processing resource/retrieval_library/000000395432.jpg... fillbuffer processing... net input width: 224, net input height: 224 img model invoke time: 36.978000 ms [2] processing resource/retrieval_library/000000577364.jpg... fillbuffer processing... net input width: 224, net input height: 224 img model invoke time: 36.976000 ms [3] processing resource/retrieval_library/apple1.jpg... fillbuffer processing... img model invoke time: 36.992000 ms [4] processing resource/retrieval_library/apple2.jpg... fillbuffer processing... net input width: 224, net input height: 224 img model invoke time: 36.970000 ms img model post process time: 0.990000 ms resource/image/apple1.jpg, the image path is resource/retrieval_library/apple1.jpg, the score is 1.000000 resource/image/apple1.jpg, the image path is resource/retrieval_library/apple2.jpg, the score is 0.977727 resource/image/apple1.jpg, the image path is resource/retrieval_library/000000395432.jpg, the score is 0.325610 ------shutdown IPU0------ client [863] disconnected, module:ipu