CLIP

1 Overview

1.1 Background

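CLIP learns the mapping between images and text by training on a large number of image-text pairs. Its key idea is that the features of matching image-text pairs lie close to each other, while the features of unrelated pairs lie far apart. Typical applications include zero-shot classification, image-to-image search, and text-to-image search.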
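The idea can be illustrated with a small NumPy sketch that mirrors the zero-shot step of predict.py in Section 2.1: both embeddings are L2-normalized, their dot product is the cosine similarity, and a softmax over the similarities scaled by 100 gives one probability per label. The random vectors and label names are placeholders, not real CLIP features; in practice they would come from the image and text encoders.

```python
import numpy as np


def l2_normalize(x: np.ndarray) -> np.ndarray:
    """Scale each row to unit L2 norm, as CLIP does before comparing features."""
    return x / np.linalg.norm(x, axis=-1, keepdims=True)


def zero_shot_probs(image_feat: np.ndarray, text_feats: np.ndarray, scale: float = 100.0) -> np.ndarray:
    """Return one probability per text prompt for a single image.

    image_feat: (D,) image embedding; text_feats: (N, D), one embedding per prompt.
    """
    img = l2_normalize(image_feat[None, :])   # (1, D)
    txt = l2_normalize(text_feats)            # (N, D)
    logits = scale * (img @ txt.T)[0]         # (N,) scaled cosine similarities
    logits -= logits.max()                    # subtract the max for a numerically stable softmax
    exp = np.exp(logits)
    return exp / exp.sum()


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    labels = ["apple", "person", "car"]              # placeholder labels
    text_feats = rng.normal(size=(3, 1024))          # placeholder text embeddings
    # an image embedding that lies close to the "apple" text embedding
    image_feat = text_feats[0] + 0.1 * rng.normal(size=1024)
    probs = zero_shot_probs(image_feat, text_feats)
    for label, p in sorted(zip(labels, probs), key=lambda t: -t[1]):
        print(f"{label:>8s}: {100 * p:.2f}%")
```

The factor 100 sharpens the softmax; without it, cosine similarities in the 0.2 to 0.3 range would yield nearly uniform probabilities.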

For details, see the official CLIP repository:

https://github.com/openai/CLIP

The following open-source CLIP models are available for download:

- RN50
- RN101
- RN50x4
- RN50x16
- RN50x64
- ViT-B/32
- ViT-B/16
- ViT-L/14
- ViT-L/14@336px

1.2 Usage Notes

The Linux SDK (alkaid) ships with pre-converted offline models and a board-side example by default. The relevant paths are:

- Board-side example program: Linux_SDK/sdk/verify/opendla/source/vlm/clip

- Board-side offline models:
  - Linux_SDK/project/board/${chip}/dla_file/ipu_open_models/vlm/clip_image_encode.img
  - Linux_SDK/project/board/${chip}/dla_file/ipu_open_models/vlm/clip_text_encode.img

- Board-side test images: Linux_SDK/sdk/verify/opendla/source/resource/retrieval_library

If you do not need to convert the models yourself, skip directly to Section 3.

2 Model Conversion

2.1 ONNX Model Conversion

Python environment setup:

    $ conda create -n clip python=3.8
    $ conda activate clip
    $ conda install --yes -c pytorch pytorch=1.7.1 torchvision cudatoolkit=11.0
    $ pip install ftfy regex tqdm
    $ git clone https://github.com/openai/CLIP.git
    $ cd CLIP

Note: this Python environment setup is only a reference example. For the full procedure, follow the official instructions:

https://github.com/openai/CLIP/blob/main/README.md

Model test:

Create an opendla directory inside the CLIP directory, put the downloaded model (RN50.pt in this example) into it, and write a model test script:

opendla/predict.py:

    import os
    import sys
    sys.path.append(os.getcwd())  # run from the CLIP root so the cloned clip package is importable
    import clip
    import torch
    from torchvision.datasets import CIFAR100

    # Load the model
    device = "cuda" if torch.cuda.is_available() else "cpu"
    model, preprocess = clip.load("./opendla/RN50.pt", device)

    # Download the dataset
    cifar100 = CIFAR100(root=os.path.expanduser("~/.cache"), download=True, train=False)

    # Prepare the inputs
    image, class_id = cifar100[3637]
    image_input = preprocess(image).unsqueeze(0).to(device)
    text_inputs = torch.cat([clip.tokenize(f"a photo of a {c}") for c in cifar100.classes]).to(device)

    # Calculate features
    with torch.no_grad():
        image_features = model.encode_image(image_input)
        text_features = model.encode_text(text_inputs)

    # Pick the top 5 most similar labels for the image
    image_features /= image_features.norm(dim=-1, keepdim=True)
    text_features /= text_features.norm(dim=-1, keepdim=True)
    similarity = (100.0 * image_features @ text_features.T).softmax(dim=-1)
    values, indices = similarity[0].topk(5)

    # Print the result
    print("\nTop predictions:\n")
    for value, index in zip(values, indices):
        print(f"{cifar100.classes[index]:>16s}: {100 * value.item():.2f}%")

Run the model test script from the CLIP directory to make sure the CLIP environment is configured correctly:

    $ python ./opendla/predict.py

Model export:

Install the dependencies:

    $ pip install onnx -i https://pypi.tuna.tsinghua.edu.cn/simple
    $ pip install onnx-simplifier -i https://pypi.tuna.tsinghua.edu.cn/simple

Write the model export script:

opendla/export.py:

    import os
    import sys
    sys.path.append(os.getcwd())  # run from the CLIP root so the cloned clip package is importable

    import clip
    import onnx
    import onnxsim
    import torch
    import torch.nn as nn

    device = torch.device("cuda" if torch.cuda.is_available() else "cpu")


    class ClipWrapper(nn.Module):
        """Loads a CLIP checkpoint in float32 eval mode; subclasses select the encoder."""

        def __init__(self, clip_model_name: str, device: torch.device = device):
            super().__init__()
            self.clip_model_name = clip_model_name
            self.device = device
            self.model = clip.load(clip_model_name, device=device)[0]
            self.model.float()
            self.model.eval()


    class ImgModelWrapper(ClipWrapper):
        def forward(self, image):
            # encode the image and return an L2-normalized (1, D) feature
            img_features = self.model.encode_image(image)
            img_features = img_features.mean(dim=0, keepdim=True)
            return img_features / img_features.norm(dim=-1, keepdim=True)


    class TextModelWrapper(ClipWrapper):
        def forward(self, tokens):
            # cast token IDs to int32, encode, and return an L2-normalized (1, D) feature
            tokens = tokens.to(self.device).to(torch.int32)
            text_features = self.model.encode_text(tokens)
            text_features = text_features.mean(dim=0, keepdim=True)
            return text_features / text_features.norm(dim=-1, keepdim=True)


    def export_onnx(model, dummy_input, f, input_name, output_name):
        """Export a wrapper to ONNX (opset 13), then simplify it with onnx-simplifier."""
        with torch.no_grad():
            torch.onnx.export(model, dummy_input, f, opset_version=13,
                              input_names=[input_name], output_names=[output_name],
                              do_constant_folding=False)
        model_onnx, check = onnxsim.simplify(onnx.load(f))
        assert check, f"simplified model {f} failed validation"
        onnx.save(model_onnx, f)


    clip_text = TextModelWrapper("./opendla/RN50.pt", device=device)
    clip_img = ImgModelWrapper("./opendla/RN50.pt", device=device)

    text_token = clip.tokenize("person").to("cpu")  # dummy text input, shape (1, 77)
    img = torch.randn((1, 3, 224, 224)).to(device)  # dummy image input

    export_onnx(clip_text, text_token, "./opendla/clip_text_encode.onnx", "text", "text_feats")
    export_onnx(clip_img, img, "./opendla/clip_img_encode.onnx", "image", "img_feats")

Run the export script; it generates clip_img_encode.onnx and clip_text_encode.onnx in the opendla directory:

    $ python ./opendla/export.py

2.2 Offline Model Conversion

2.2.1 Pre- and Post-processing

-

预处理

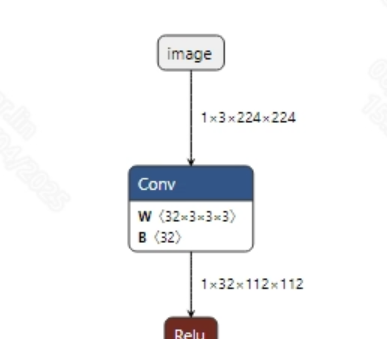

转换成功的clip_img_encode.onnx模型输入信息如下图所示, 要求输入图像的尺寸为 (1, 3, 224, 224), 此外需要将像素值归一化到 [0, 1] 范围内。
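A minimal sketch of bringing an image into this layout on the host, for example to feed the float ONNX model when comparing against the simulator in Section 2.3. It assumes the image is already decoded as a 224×224×3 uint8 RGB array (decoding and resizing are left out to keep it dependency-free) and applies only the [0, 1] scaling stated above; whether an additional mean/std step is needed depends on the mean/std settings in input_config_img.ini (Section 2.2.3). The helper name to_model_input is hypothetical.

```python
import numpy as np


def to_model_input(rgb_hwc: np.ndarray) -> np.ndarray:
    """Convert a 224x224x3 uint8 RGB image into a (1, 3, 224, 224) float32 tensor in [0, 1]."""
    if rgb_hwc.shape != (224, 224, 3) or rgb_hwc.dtype != np.uint8:
        raise ValueError(f"expected (224, 224, 3) uint8, got {rgb_hwc.shape} {rgb_hwc.dtype}")
    x = rgb_hwc.astype(np.float32) / 255.0          # scale pixel values to [0, 1]
    x = np.transpose(x, (2, 0, 1))                  # HWC -> CHW
    return np.ascontiguousarray(x[np.newaxis, ...])  # add the batch dimension -> NCHW


if __name__ == "__main__":
    demo = np.zeros((224, 224, 3), dtype=np.uint8)
    print(to_model_input(demo).shape)
```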

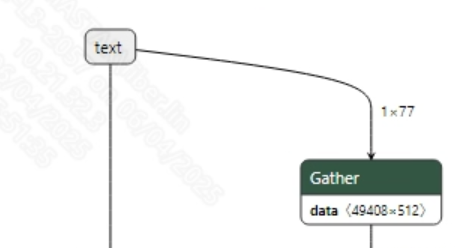

转换成功的clip_text_encode.onnx模型输入信息如下图所示, 要求输入图像的尺寸为 (1, 77), 不需要额外的前处理。
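The (1, 77) token layout produced by clip.tokenize is: a start-of-text token (ID 49406), the BPE IDs of the text, an end-of-text token (ID 49407), then zero padding up to the context length of 77. CLIP's text encoder reads the feature at the position of the largest token ID, i.e. the end-of-text token, so that token must survive truncation. The sketch below reproduces only this layout; the BPE IDs themselves still have to come from CLIP's tokenizer (on the board, presumably via the dict vocabulary file of Section 3.3), so the IDs used in the test are placeholders. The dtype is int32 to match the cast in TextModelWrapper; pack_tokens is a hypothetical helper name.

```python
import numpy as np

SOT_TOKEN = 49406    # ID of "<|startoftext|>" in CLIP's BPE vocabulary
EOT_TOKEN = 49407    # ID of "<|endoftext|>"
CONTEXT_LENGTH = 77


def pack_tokens(bpe_ids, context_length=CONTEXT_LENGTH, truncate=True):
    """Lay out BPE IDs the way clip.tokenize does: [SOT, ids..., EOT, 0, 0, ...]."""
    tokens = [SOT_TOKEN, *bpe_ids, EOT_TOKEN]
    if len(tokens) > context_length:
        if not truncate:
            raise ValueError(f"{len(tokens)} tokens exceed context length {context_length}")
        tokens = tokens[:context_length]
        tokens[-1] = EOT_TOKEN           # keep the end-of-text marker as the last token
    out = np.zeros((1, context_length), dtype=np.int32)
    out[0, :len(tokens)] = tokens
    return out
```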

-

后处理

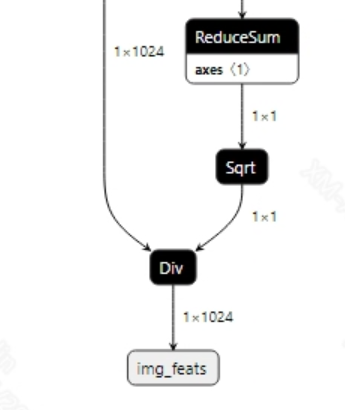

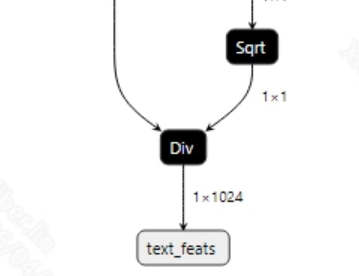

该模型不需要后处理, clip_img_encode.onnx和clip_text_encode.onnx模型的输出信息如下图所示

2.2.2 Offline Model Conversion Procedure

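Note: 1) OpenDLAModel refers to the smodel directory obtained by extracting image-dev_model_convert.tar. 2) The conversion commands must be run in the Docker environment; first load the SGS Docker environment as described in the Docker development environment tutorial.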

Copy the ONNX models into the conversion code directory:

    $ cp ./opendla/clip_img_encode.onnx OpenDLAModel/vlm/clip/onnx
    $ cp ./opendla/clip_text_encode.onnx OpenDLAModel/vlm/clip/onnx

Conversion commands:

    $ cd IPU_SDK_Release/docker
    $ bash run_docker.sh
    # inside Docker, change to the OpenDLAModel directory
    $ cd /work/SGS_XXX/OpenDLAModel
    # -p takes the absolute path of SGS_IPU_Toolchain
    $ bash convert.sh -a vlm/clip -c config/vlm_clip.cfg -p /path/to/SGS_IPU_Toolchain -s false

Generated models:

    output/${chip}_${time}/clip_image_encode.img
    output/${chip}_${time}/clip_image_encode_fixed.sim
    output/${chip}_${time}/clip_image_encode_float.sim
    output/${chip}_${time}/clip_text_encode.img
    output/${chip}_${time}/clip_text_encode_fixed.sim
    output/${chip}_${time}/clip_text_encode_float.sim

2.2.3 Key Script Parameters

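input_config_img.ini:

    [INPUT_CONFIG]
    inputs = image; #ONNX input node name, must match input_names in export.py; separate multiple names with ","
    training_input_formats = RGB; #input format used during training, usually RGB
    input_formats = BGRA; #input format on the board: BGRA or YUV_NV12, as needed
    quantizations = TRUE; #enable input quantization; do not modify
    mean_red = 123.675; #mean, depends on the model's preprocessing; set as needed
    mean_green = 116.28; #mean, depends on the model's preprocessing; set as needed
    mean_blue = 103.53; #mean, depends on the model's preprocessing; set as needed
    std_value = 58.395:57.12:57.375; #standard deviation, depends on the model's preprocessing; set as needed

    [OUTPUT_CONFIG]
    outputs = img_feats; #ONNX output node name, must match output_names in export.py; separate multiple names with ","
    dequantizations = TRUE; #enable dequantization (TRUE recommended): FALSE outputs int16, TRUE outputs float32

    [OPTIMIZE_CONFIG]
    Light_Offline_Model=TRUE;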
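The mean and std values above are given on the 0-255 pixel scale: they are the widely used ImageNet statistics multiplied by 255 (0.485 × 255 = 123.675, 0.229 × 255 = 58.395, and so on). Standardizing raw pixels with them is therefore equivalent to scaling to [0, 1] first and standardizing with the [0, 1]-scale statistics. The official CLIP preprocess transform uses different statistics (mean 0.48145466, 0.4578275, 0.40821073; std 0.26862954, 0.26130258, 0.27577711), so if board results drift from PyTorch, the ×255 versions of these may be worth checking as an alternative configuration. The sketch converts between the scales and checks the equivalence numerically; the helper names are hypothetical.

```python
import numpy as np

# Values from input_config_img.ini (0-255 pixel scale)
INI_MEAN = np.array([123.675, 116.28, 103.53])
INI_STD = np.array([58.395, 57.12, 57.375])

# Normalization constants of the official CLIP preprocess ([0, 1] scale)
CLIP_MEAN = np.array([0.48145466, 0.4578275, 0.40821073])
CLIP_STD = np.array([0.26862954, 0.26130258, 0.27577711])


def to_255_scale(mean01, std01):
    """Convert [0, 1]-scale mean/std to the 0-255 scale used in the ini file."""
    return np.asarray(mean01) * 255.0, np.asarray(std01) * 255.0


def standardize(pixels_255, mean_255, std_255):
    """Per-channel (x - mean) / std; pixels_255 has shape (..., 3)."""
    return (np.asarray(pixels_255, dtype=np.float64) - mean_255) / std_255


if __name__ == "__main__":
    x = np.array([[200.0, 100.0, 50.0]])                     # one RGB pixel on the 0-255 scale
    a = standardize(x, INI_MEAN, INI_STD)                    # ini style: raw pixels
    b = (x / 255.0 - INI_MEAN / 255.0) / (INI_STD / 255.0)   # scale to [0, 1] first
    print(np.allclose(a, b))
    clip_mean, clip_std = to_255_scale(CLIP_MEAN, CLIP_STD)
    print("CLIP mean x255:", np.round(clip_mean, 3))
    print("CLIP std  x255:", np.round(clip_std, 3))
```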

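input_config_text.ini:

    [INPUT_CONFIG]
    inputs = text; #ONNX input node name, must match input_names in export.py; separate multiple names with ","
    input_formats = RAWDATA_U16_NHWC; #input format on the board: raw uint16 data (token IDs) in NHWC layout
    quantizations = TRUE; #enable input quantization; do not modify

    [OUTPUT_CONFIG]
    outputs = text_feats; #ONNX output node name, must match output_names in export.py; separate multiple names with ","
    dequantizations = TRUE; #enable dequantization (TRUE recommended): FALSE outputs int16, TRUE outputs float32

    [OPTIMIZE_CONFIG]
    optimize_layernorm_precision=TRUE; #operator optimization (LayerNorm precision)
    optimize_Instancenorm_precision=TRUE; #operator optimization (InstanceNorm precision)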
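With RAWDATA_U16_NHWC, the token IDs reach the board as unsigned 16-bit integers. CLIP's vocabulary has 49408 entries (largest ID 49407), so every ID fits into uint16 (maximum 65535). A sketch of preparing such a buffer on the host, e.g. for experiments with raw input files; treating the (1, 77) tensor as 77 contiguous little-endian uint16 values is an assumption about the layout, and tokens_to_u16 is a hypothetical helper.

```python
import numpy as np

CLIP_VOCAB_SIZE = 49408  # CLIP BPE vocabulary size; the largest ID is 49407 (end-of-text)


def tokens_to_u16(tokens) -> np.ndarray:
    """Check that (1, 77) token IDs are valid CLIP IDs and convert them to little-endian uint16."""
    tokens = np.asarray(tokens)
    if tokens.shape != (1, 77):
        raise ValueError(f"expected shape (1, 77), got {tokens.shape}")
    if tokens.min() < 0 or tokens.max() >= CLIP_VOCAB_SIZE:
        raise ValueError("token ID outside the CLIP vocabulary range")
    return tokens.astype("<u2")


if __name__ == "__main__":
    demo = np.zeros((1, 77), dtype=np.int32)
    demo[0, :3] = [49406, 320, 49407]      # start-of-text, placeholder ID, end-of-text
    raw = tokens_to_u16(demo).tobytes()    # 77 values x 2 bytes each
    print(len(raw))
```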

vlm_clip.cfg:

    [CLIP]
    CHIP_LIST=pcupid #platform name; must match the board platform, otherwise the model cannot run
    Model_LIST=clip_img_encode,clip_text_encode #names of the input ONNX models
    INPUT_SIZE_LIST=0,0 #model input resolutions
    INPUT_INI_LIST=input_config_img.ini,input_config_text.ini #input configuration files
    CLASS_NUM_LIST=0,0 #set to 0
    SAVE_NAME_LIST=clip_image_encode.img,clip_text_encode.img #output model names
    QUANT_DATA_PATH=quant_data_img,quant_data_txt #quantization data paths for the image and text models
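Because each list in vlm_clip.cfg carries one entry per model, a mismatch in entry counts is an easy mistake when adding or removing a model. A small sanity check using Python's configparser, assuming every *_LIST key and QUANT_DATA_PATH holds one comma-separated entry per model, as in the example above; check_cfg is a hypothetical helper, not part of convert.sh.

```python
import configparser

LIST_KEYS = ["Model_LIST", "INPUT_SIZE_LIST", "INPUT_INI_LIST",
             "CLASS_NUM_LIST", "SAVE_NAME_LIST", "QUANT_DATA_PATH"]


def check_cfg(text: str, section: str = "CLIP") -> dict:
    """Parse a vlm_clip.cfg-style text and check that the per-model lists line up."""
    parser = configparser.ConfigParser(inline_comment_prefixes=("#",))
    parser.optionxform = str          # keep key case (Model_LIST, CHIP_LIST, ...)
    parser.read_string(text)
    sec = parser[section]
    lists = {k: [v.strip() for v in sec[k].split(",")] for k in LIST_KEYS if k in sec}
    n_models = len(lists["Model_LIST"])
    for key, values in lists.items():
        if len(values) != n_models:
            raise ValueError(f"{key} has {len(values)} entries, Model_LIST has {n_models}")
    return lists
```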

2.3 Model Simulation

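Obtaining the float/fixed/offline model outputs:

    # -p takes the absolute path of SGS_IPU_Toolchain
    $ bash convert.sh -a vlm/clip -c config/vlm_clip.cfg -p /path/to/SGS_IPU_Toolchain -s true

After running the command above, the output tensors of the float model are saved by default to txt files under vlm/clip/log/output. The vlm/clip/convert.sh script also contains simulation examples for the fixed and offline models; uncomment the corresponding code blocks to obtain the fixed and offline model outputs.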

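Model accuracy comparison:

With the same input fed to the models above, activate the environment set up in Section 2.1 and add the following prints to CLIP/opendla/predict.py, right after the line that normalizes text_features:

    print(image_features)
    print(text_features)

These print the output tensors of the PyTorch image and text models, which can then be compared with the outputs of the float, fixed, and offline models. Note in particular that the original model outputs are in NCHW format, whereas the float/fixed/offline model outputs are in NHWC format.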
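A minimal sketch of such a comparison, assuming the PyTorch tensor and the simulator output have already been loaded as NumPy arrays (reading the txt files under vlm/clip/log/output is not shown, since their format is not described here). It reports cosine similarity and maximum absolute error, two common metrics for this kind of check; the function names are hypothetical. For 2-D outputs such as the (1, D) features returned by the export wrappers, the NHWC/NCHW difference does not change the element order, so the transpose only matters for 4-D tensors.

```python
import numpy as np


def nhwc_to_nchw(x: np.ndarray) -> np.ndarray:
    """Transpose a 4-D NHWC tensor to NCHW; lower-rank tensors are returned unchanged."""
    return np.transpose(x, (0, 3, 1, 2)) if x.ndim == 4 else x


def compare(reference: np.ndarray, candidate: np.ndarray) -> dict:
    """Cosine similarity and max absolute error between two outputs of equal size."""
    a = np.asarray(reference, dtype=np.float64).ravel()
    b = np.asarray(candidate, dtype=np.float64).ravel()
    if a.shape != b.shape:
        raise ValueError(f"size mismatch: {a.shape} vs {b.shape}")
    cos = float(a @ b / (np.linalg.norm(a) * np.linalg.norm(b)))
    return {"cosine": cos, "max_abs_err": float(np.max(np.abs(a - b)))}


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    torch_out = rng.normal(size=(1, 1024))                    # stand-in for print(image_features)
    sim_out = torch_out + 0.01 * rng.normal(size=(1, 1024))   # stand-in for a fixed/offline output
    print(compare(torch_out, nhwc_to_nchw(sim_out)))
```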

3 Board Deployment

3.1 Building the Program

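Before building the example, build the complete SDK with the defconfig that matches your board (NAND/NOR/eMMC, DDR type, etc.); see the "Development Environment Setup" document in the alkaid SDK sigdoc for details.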

Build the board-side CLIP example:

    $ cd sdk/verify/opendla
    $ make clean && make source/vlm/clip -j8

Generated executable:

    sdk/verify/opendla/out/${AARCH}/app/prog_vlm_clip

3.2 Runtime Files

Before running the program, copy the following files to the board:

- prog_vlm_clip

- retrieval_library

- clip_image_encode.img

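- clip_text_encode.img

- en_vocab.txt (the dictionary passed as the dict argument; resource/en_vocab.txt in the examples below)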

3.3 Running the Example

Usage:

    ./prog_vlm_clip type gallery query topk imgModel textModel dict

Required Input:

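- type: 0: text-to-image search; 1: image-to-image search

- gallery: directory of the image gallery to search

- query: query image path or text description

- topk: number of top-ranked results to output

- imgModel: path to the image offline model

- textModel: path to the text offline model

- dict: dictionary (vocabulary) file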
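Because both encoders output L2-normalized features, ranking the gallery reduces to a dot product between the query feature and each gallery feature, followed by a top-k selection; this is consistent with the image-to-image log below, where the query image scores 1.000000 against itself. The sketch illustrates that ranking only and is not the source of prog_vlm_clip; the gallery features and file names are placeholders, and topk_retrieval is a hypothetical helper.

```python
import numpy as np


def topk_retrieval(query_feat, gallery_feats, names, k):
    """Rank gallery items by cosine similarity to the query and return the top k.

    query_feat: (D,) and gallery_feats: (N, D), both assumed L2-normalized,
    so the dot product equals the cosine similarity.
    """
    scores = np.asarray(gallery_feats) @ np.asarray(query_feat)   # (N,)
    k = min(k, len(names))
    order = np.argsort(-scores, kind="stable")[:k]                 # highest score first
    return [(names[i], float(scores[i])) for i in order]


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    feats = rng.normal(size=(5, 1024))
    feats /= np.linalg.norm(feats, axis=1, keepdims=True)   # normalize like the encoders do
    names = [f"img{i}.jpg" for i in range(5)]
    for name, score in topk_retrieval(feats[3], feats, names, k=3):
        print(f"{name}, the score is {score:.6f}")
```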

Typical output, text-to-image search:

    ./prog_vlm_clip 0 resource/retrieval_library/ "apple" 3 models/clip_image_encode.img models/clip_text_encode.img resource/en_vocab.txt
    client [863] connected, module:ipu
    found 5 images!
    text model invoke time: 27.424000 ms
    text data: -0.0213144 -0.0241877 -0.0107617
    [0] processing resource/retrieval_library/000000177958.jpg...
    fillbuffer processing...
    net input width: 224, net input height: 224
    img data: -0.000272722 0.0139259 -0.00363061
    img model invoke time: 37.070000 ms
    [1] processing resource/retrieval_library/000000395432.jpg...
    fillbuffer processing...
    net input width: 224, net input height: 224
    img data: -0.0274256 -0.00395447 0.0141475
    img model invoke time: 37.052000 ms
    [2] processing resource/retrieval_library/000000577364.jpg...
    fillbuffer processing...
    net input width: 224, net input height: 224
    img data: -0.015102 -0.0224314 -0.0334596
    img model invoke time: 37.044000 ms
    [3] processing resource/retrieval_library/apple1.jpg...
    fillbuffer processing...
    img data: 0.0212212 0.0622829 -0.0304767
    img model invoke time: 37.069000 ms
    [4] processing resource/retrieval_library/apple2.jpg...
    fillbuffer processing...
    net input width: 224, net input height: 224
    img data: 0.0141815 0.0561978 -0.032471
    img model invoke time: 37.059000 ms
    img model post process time: 0.980000 ms
    apple, the image path is resource/retrieval_library/apple2.jpg, the score is 0.243618
    apple, the image path is resource/retrieval_library/apple1.jpg, the score is 0.242924
    apple, the image path is resource/retrieval_library/000000577364.jpg, the score is 0.133982
    ------shutdown IPU0------
    client [863] disconnected, module:ipu

Typical output, image-to-image search:

    ./prog_vlm_clip 1 resource/retrieval_library/ resource/retrieval_library/apple1.jpg 3 models/clip_image_encode.img models/clip_text_encode.img resource/en_vocab.txt
    client [865] connected, module:ipu
    found 5 images!
    fillbuffer processing...
    img: 0.0212212 0.0622829 -0.0304767
    [0] processing resource/retrieval_library/000000177958.jpg...
    fillbuffer processing...
    net input width: 224, net input height: 224
    img model invoke time: 36.955000 ms
    [1] processing resource/retrieval_library/000000395432.jpg...
    fillbuffer processing...
    net input width: 224, net input height: 224
    img model invoke time: 36.978000 ms
    [2] processing resource/retrieval_library/000000577364.jpg...
    fillbuffer processing...
    net input width: 224, net input height: 224
    img model invoke time: 36.976000 ms
    [3] processing resource/retrieval_library/apple1.jpg...
    fillbuffer processing...
    img model invoke time: 36.992000 ms
    [4] processing resource/retrieval_library/apple2.jpg...
    fillbuffer processing...
    net input width: 224, net input height: 224
    img model invoke time: 36.970000 ms
    img model post process time: 0.990000 ms
    resource/image/apple1.jpg, the image path is resource/retrieval_library/apple1.jpg, the score is 1.000000
    resource/image/apple1.jpg, the image path is resource/retrieval_library/apple2.jpg, the score is 0.977727
    resource/image/apple1.jpg, the image path is resource/retrieval_library/000000395432.jpg, the score is 0.325610
    ------shutdown IPU0------
    client [863] disconnected, module:ipu